How do you say my name?

You can just say it as “Jao-young Choo”.

😊 No stress about precision; I’m happy with any close version.

I am a first-year PhD student in the Department of Computer Science at University College London (UCL), where I am fortunate to be co-supervised by Prof. He Ye and Prof. Federica Sarro. Previously, I completed my master’s degree at the School of Computer Science and Technology, Huazhong University of Science and Technology (HUST), advised by Prof. Yao Wan. My academic journey has also been enriched by collaborations with Prof. Lingming Zhang at UIUC and Prof. Hongyu Zhang at Chongqing University.

I am also a co-founder of EuniAI, where we are building Prometheus — an open-source AI agent designed to push the boundaries of automated software development.

My research is inspired by the exciting frontiers at the intersection of artificial intelligence and software engineering, with a current focus on multimodal coding agents. I am passionate about building next-generation tools that make code smarter, more accessible, and more trustworthy. For more details about my academic background, please see my CV.

🤝 Let’s Connect: I am always eager to connect and collaborate, whether you share my interests or bring a different perspective from another field. If you’d like to discuss research, exchange ideas, or just say hi, feel free to reach out at zhaoyang.chu.25@ucl.ac.uk, zhaoyang.chu@euni.ai, or zychu418@gmail.com.

🌟🌟 Excited to share: I will be in Rio de Janeiro, Brazil for ICSE 2026 next April—looking forward to meeting many of you there! 🤗🤗

🔥 News

- 2025.08: 🎉 Our work on efficient reasoning for R1-style LLMs was accepted to EMNLP 2025 Findings.

- 2025.07: 🎉 Our work on LLM-as-a-Judge for code summarization was accepted by IEEE Transactions on Software Engineering.

- 2025.06: 🎉 Our paper on machine unlearning for code LLMs was accepted to ICSE 2026.

- 2025.05: 🎉 Our research on dynamic code knowledge synchronization for LLMs was accepted to ICML 2025.

- 2025.03: 🎉 Our SANER 2025 paper received the IEEE TCSE Distinguished Paper Award🏆!

- 2025.01: 🎉 Our work on a test generation benchmark for LLMs was accepted to NAACL 2025 Findings.

- 2024.12: 🎉 Our study on pre-trained code model selection for reuse was accepted to SANER 2025.

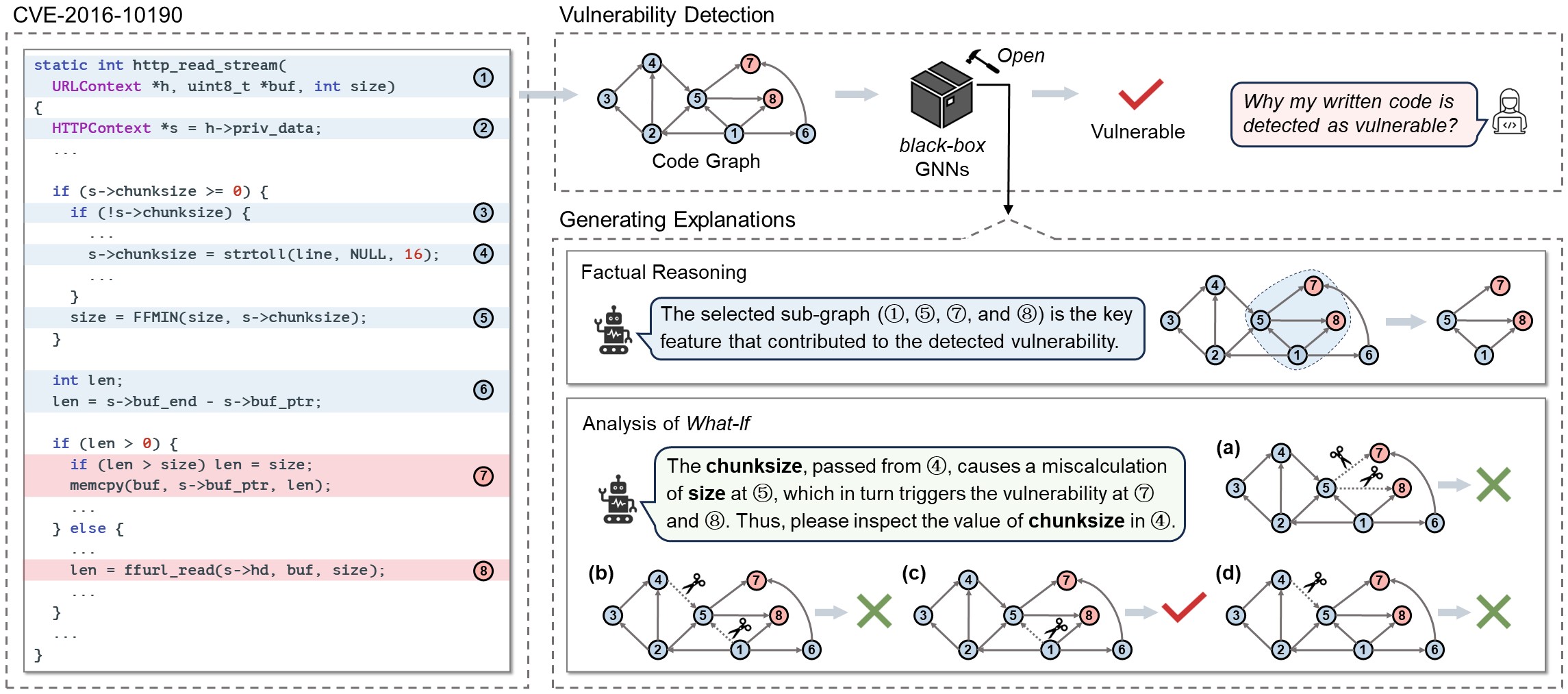

- 2024.03: 🎉 Our research on counterfactual reasoning for GNN-based vulnerability detection was accepted to ISSTA 2024.

📝 Publications

* indicates equal contribution. † indicates the corresponding author.

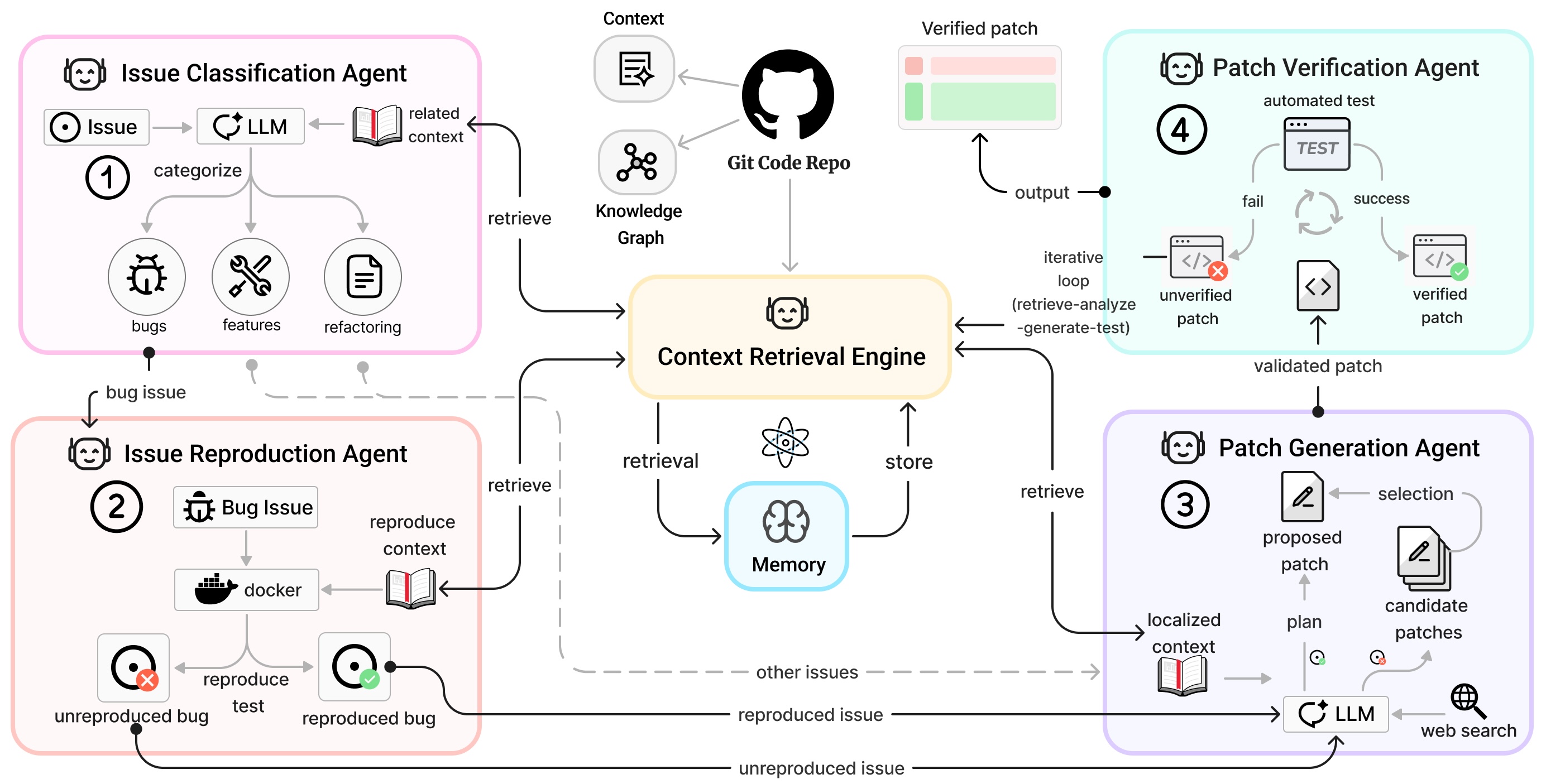

Prometheus: Towards Long-Horizon Codebase Navigation for Repository-Level Problem Solving.

Yue Pan*, Zimin Chen*, Siyu Lu, Zhaoyang Chu, Xiang Li, Han Li, Yang Feng, Claire Le Goues, Federica Sarro, Martin Monperrus, He Ye†.

Preprint.

[ Paper ] [ Code ]

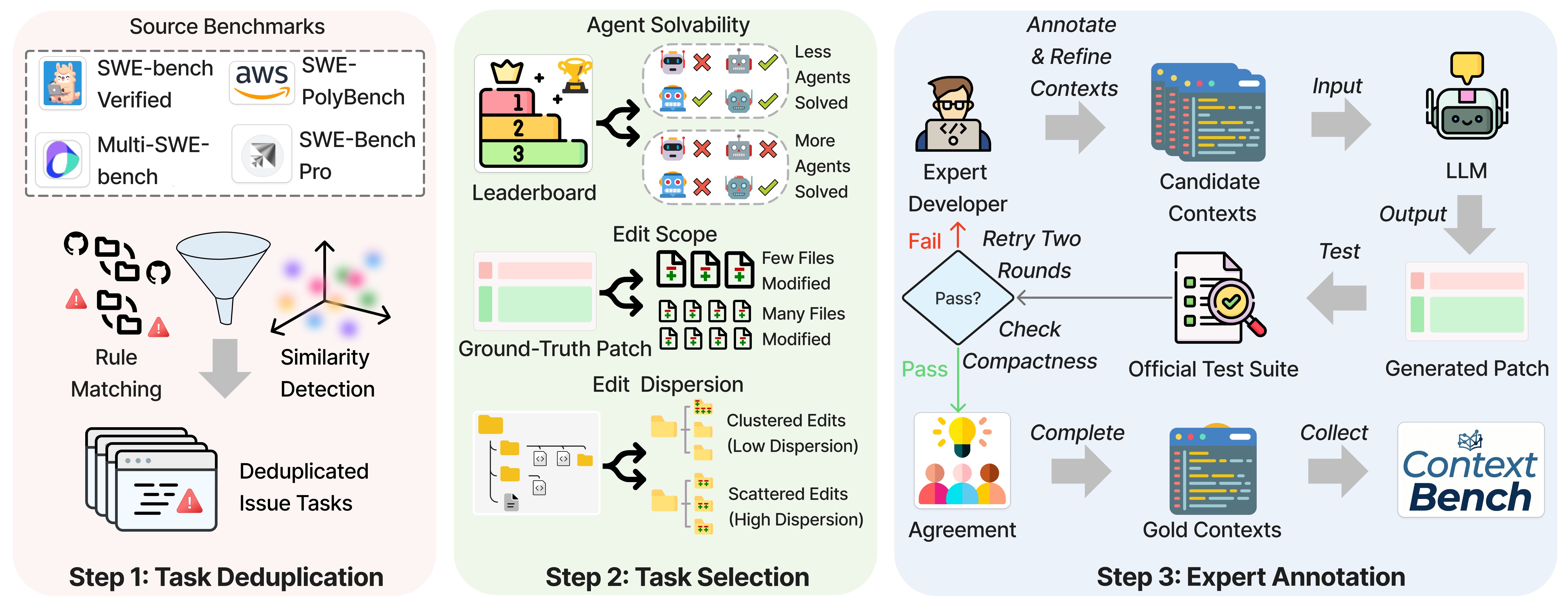

ContextBench: A Benchmark for Context Retrieval in Coding Agents.

Han Li, Letian Zhu*, Bohan Zhang*, Rili Feng*, Jiaming Wang, Yue Pan, Earl T. Barr, Federica Sarro, Zhaoyang Chu†, He Ye†.

Preprint.

[ Homepage ] [ Paper ] [ Code ] [ Dataset ]

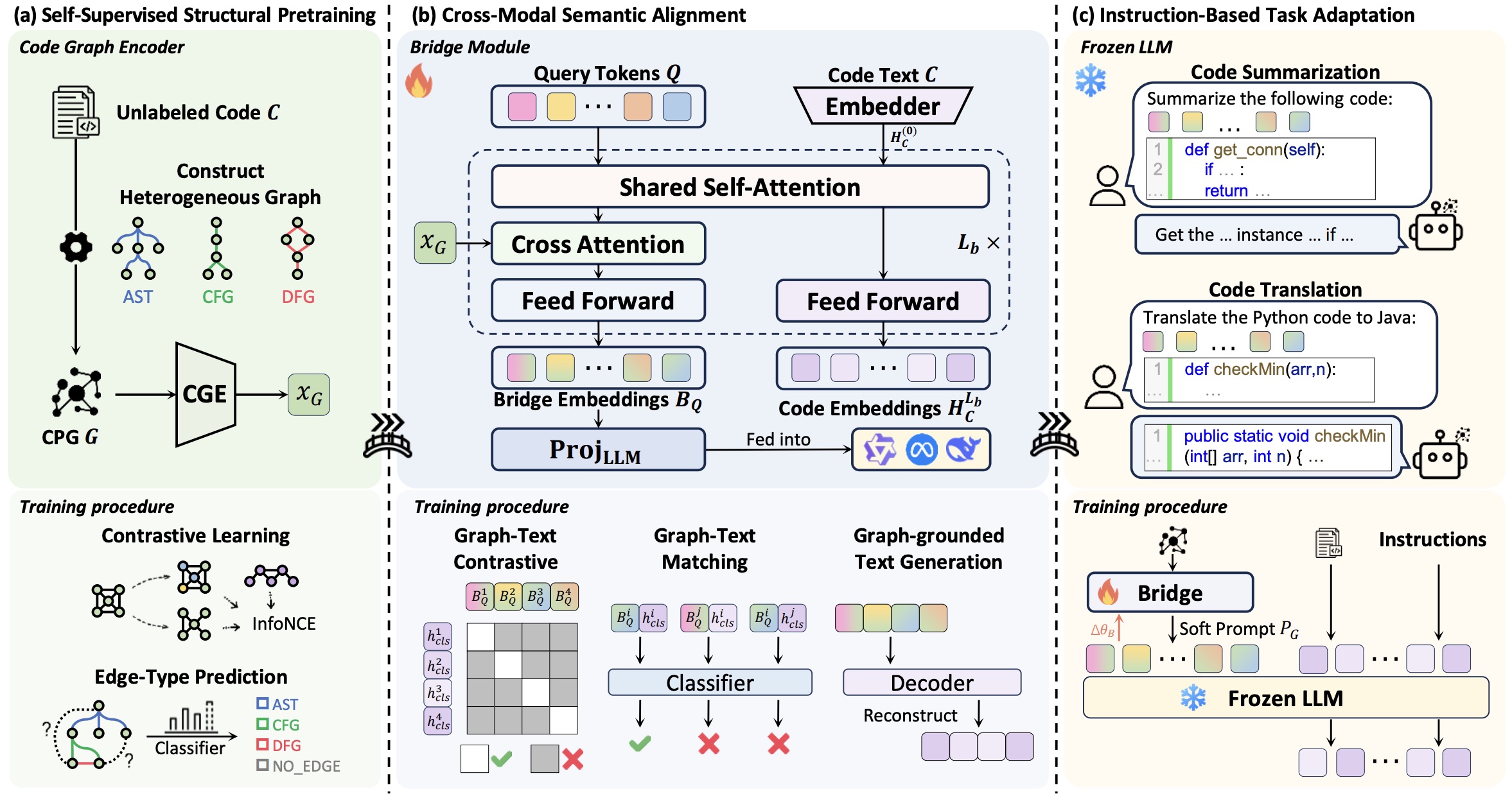

Bridging Code Graphs and Large Language Models for Better Code Understanding.

Zeqi Chen, Zhaoyang Chu, Yi Gui, Feng Guo, Yao Wan, Chuan Shi†.

Preprint.

[ Paper ] [ Code ]

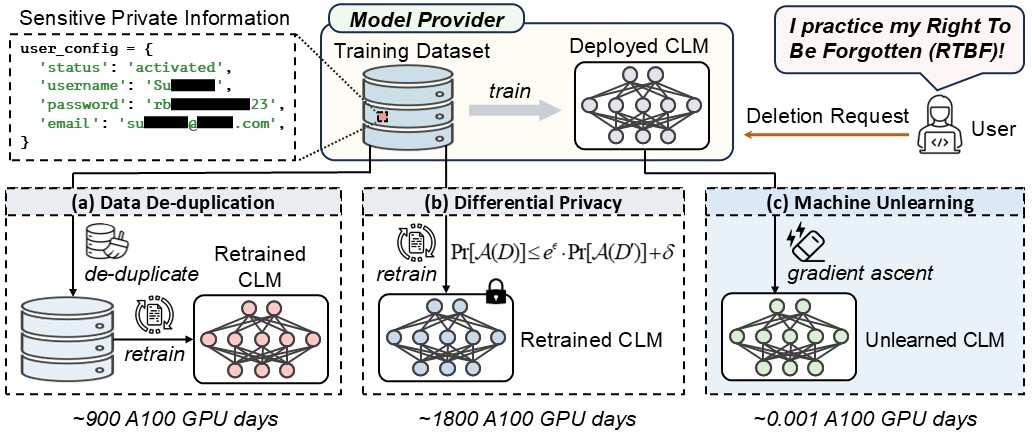

Scrub It Out! Erasing Sensitive Memorization in Code Language Models via Machine Unlearning.

Zhaoyang Chu, Yao Wan†, Zhikun Zhang, Di Wang, Zhou Yang, Hongyu Zhang, Pan Zhou, Xuanhua Shi, Hai Jin, David Lo.

ICSE 2026. The IEEE/ACM International Conference on Software Engineering.

[ Paper ] [ Code ]

CODESYNC: Synchronizing Large Language Models with Dynamic Code Evolution at Scale.

Chenlong Wang*, Zhaoyang Chu*, Zhengxiang Cheng*, Xuyi Yang, Kaiyue Qiu, Yao Wan†, Zhou Zhao, Xuanhua Shi, Dongping Chen.

ICML 2025. International Conference on Machine Learning.

[ Paper ] [ Code ]

How to Select Pre-Trained Code Models for Reuse? A Learning Perspective.

Zhangqian Bi, Yao Wan†, Zhaoyang Chu, Yufei Hu, Junyi Zhang, Hongyu Zhang, Guandong Xu, Hai Jin.

SANER 2025. The IEEE International Conference on Software Analysis, Evolution and Reengineering.

IEEE TCSE Distinguished Paper Award🏆.

[ Paper ] [ Code ]

Can Large Language Models Serve as Evaluators for Code Summarization?

Yang Wu, Yao Wan†, Zhaoyang Chu, Wenting Zhao, Ye Liu, Hongyu Zhang, Xuanhua Shi, Philip S. Yu.

IEEE Transactions on Software Engineering (TSE), 2025.

[ Paper ] [ Code ]

Wait, We Don’t Need to “Wait”! Removing Thinking Tokens Improves Reasoning Efficiency.

Chenlong Wang, Yuanning Feng, Dongping Chen, Zhaoyang Chu, Ranjay Krishna†, Tianyi Zhou†.

EMNLP 2025 Findings. The Conference on Empirical Methods in Natural Language Processing.

[ Paper ]

TESTEVAL: Benchmarking Large Language Models for Test Case Generation.

Wenhan Wang*, Chenyuan Yang*, Zhijie Wang*, Yuheng Huang, Zhaoyang Chu, Da Song, Lingming Zhang, An Ran Chen, Lei Ma.

NAACL 2025 Findings. The Annual Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics.

[ Homepage ] [ Paper ] [ Code ]

Graph Neural Networks for Vulnerability Detection: A Counterfactual Explanation.

Zhaoyang Chu, Yao Wan†, Qian Li, Yang Wu, Hongyu Zhang, Yulei Sui, Guandong Xu, Hai Jin.

ISSTA 2024. The ACM SIGSOFT International Symposium on Software Testing and Analysis.

[ Paper ] [ Code ]

🎖 Honors and Awards

- 2025, IEEE TCSE Distinguished Paper Award.

📖 Education

- 2025.09 - now, Ph.D., University College London.

- 2022.09 - 2025.06, M.E., Huazhong University of Science and Technology (Graduated with Honors).

- 2018.09 - 2022.06, B.E., Huazhong Agricultural University (Graduated with Honors).